The World Quality Report 2024-25 (WQR) provides a few interesting insights into adopting AI in software testing. However, one of their recommendations regarding AI implementation is a terrible idea for most businesses.

When it comes to implementing AI for software testing, the WQR has the following key recommendations:

- Start Now: If you are not yet exploring or actively using Gen AI solutions, it’s crucial to begin now to stay competitive.

- Experiment Broadly: Don’t rush to commit to a single platform or use case. Instead, experiment with multiple approaches to identify the ones that provide the most significant benefits for your organisation.

- Enhance, Don’t Replace: Understand that Gen AI will not replace your quality engineers but will significantly enhance their productivity. However, these improvements will not be immediate; allow sufficient time for the benefits to become apparent.

Two of these are entirely sound, and I will look at them in more detail at the end of this article. However, recommendation 2 is only potentially appropriate for the largest corporate behemoths (those above £1 billion turnover) and is bad advice for every other company.

What Does The WQR Mean by Software Testing AI?

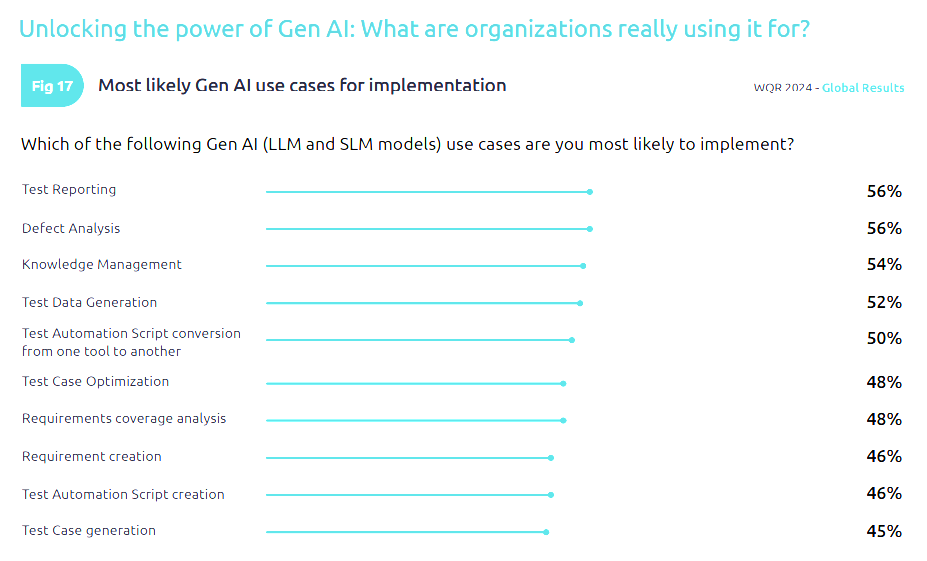

AI means many things to many people, so let’s start by looking at what it means to the authors of the World Quality Report.

While the authors don’t define Gen AI per se, page 31 does contain a list of use cases:

Three Problems With ‘Experiment Broadly’

This advice may appear reasonable on the surface, but in reality it is only potentially appropriate for huge businesses. In the UK, there are probably only around 100 companies for whom this is sound advice.

An overwhelming majority of companies face severe budget constraints and resourcing challenges, and lack the bandwidth or expertise to carry out grandiose projects like this. QA teams have even less capacity, given the high-pressure, time-constrained nature of software testing.

Taking this approach would eat up time and effort, impact software quality, and give AI such a bad reputation that it would be years before the team gave it another go.

I still speak to people who are scarred by poorly thought-out experiments with automation in the 1990s and 2000s.

So, while the WQR’s other AI recommendations do offer valuable guidance for most companies, the advice to ‘Experiment Broadly’ could hinder rather than help their AI adoption journey in software testing in a number of ways:

1. Short-Term Productivity Impact

Implementing and evaluating multiple AI solutions is extremely time-consuming and will decrease short-term productivity, as testers would need to divert their attention from their primary testing duties to learn and assess various AI tools.

This short-term disruption is too costly for most organisations that rely on quick turnarounds and efficient use of resources.

2. Difficulty in Evaluation

The WQR’s suggestion assumes that organisations can effectively evaluate the benefits of different AI approaches. Ok, so how, exactly?

How are teams meant to conduct comprehensive evaluations of multiple tools without a standardised comparison method—this is all new—or the luxury of extended trial periods?

Plus, Gen AI solutions move so fast that any comparison would likely be redundant by the time any reasonable trial was completed.

3. Immediate vs. Long-Term Benefits

The report acknowledges that “improvements will not be immediate” and advises allowing “sufficient time for the benefits to become apparent”. Sure, in a perfect world we would, but test teams don’t work like this.

Automation started off like this, but wasn’t widely adopted by most normal businesses until it could quickly demonstrate value and justify the investment of time and resources.

Here’s a More Realistic Approach to AI

Rather than broadly experimenting with multiple AI solutions, a more practical approach is to:

- Prioritise High-Impact Areas: Identify specific testing processes that could benefit most from AI augmentation and start there. This targeted approach can yield more immediate and tangible results.

- Leverage Existing Tools: Many testing tools now incorporate AI features. Companies should explore these options first, as they can often be integrated more seamlessly into existing workflows.

- Incremental Implementation: Focus on implementing proven AI features within current tooling piecemeal. This allows for gradual adoption without overwhelming the team or disrupting existing workflows.

- Monitor Developments: Look for emerging solutions that incorporate AI and adopt more mature solutions that give tangible results.

Useful Recommendations From the WQR

While the “Experiment Broadly” approach is unsuitable for most companies, the World Quality Report’s other key AI recommendations are realistic and beneficial for these organisations.

You Should Start Now

The report’s advice to “Start Now” if not yet exploring or actively using Gen AI solutions is sound for most companies, although this doesn’t mean diving in headfirst. Instead, companies should begin to educate themselves and identify potential areas where AI could enhance their testing processes.

Benefits of Starting Now:

- Competitive Advantage: Early adoption, even on a small scale, can give you an edge over competitors who are slower to embrace AI in testing.

- Learning Curve: Starting now allows teams to gradually build expertise in AI-assisted testing, reducing the risk of falling behind industry trends.

- Incremental Improvements: By starting small, you can see gradual improvements in your testing processes without significant disruption.

- Future-Proofing: As AI becomes more prevalent in software development, early familiarity will help you adapt more quickly to future advancements.

Enhance, Don’t Replace

The WQR’s recommendation to “Enhance, Don’t Replace” is particularly relevant for normal companies—Gen AI will not replace quality engineers but will significantly enhance their productivity.

This approach aligns well with the incremental implementation strategy, allowing companies to gradually introduce AI tools that complement their existing workforce and processes.

Key Considerations:

- Skill Augmentation: AI should be viewed as a tool to enhance the capabilities of existing quality engineers, not as a replacement for human expertise.

- Process Optimisation: Focus on using AI to streamline repetitive tasks, allowing human testers to concentrate on more complex, strategic aspects of quality assurance.

- Balanced Integration: Strive for a balance between AI-assisted testing and traditional methods, leveraging the strengths of both approaches.

- Continuous Learning: Encourage testers to adapt and evolve their skills alongside AI implementation, fostering a culture of continuous improvement.

Conclusion

While the WQR 2024-25 provides valuable insights into the growing adoption of AI in software testing, its recommendation to ‘Experiment Broadly’ is unlikely to be the most practical approach for all but the biggest businesses.

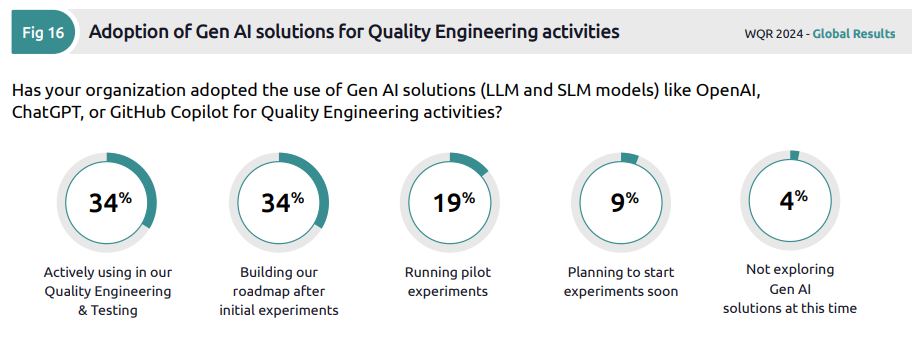

The report’s data showing that 68% of organisations are either using Gen AI or developing roadmaps after initial trials is encouraging, but it’s important to note that this figure reflects larger organisations with more resources, and only 34% of these corporate giants are actually using Gen AI in anger.

For most companies, a more measured, targeted approach to AI adoption in software testing is likely to be more effective.

By focusing on incremental improvements, leveraging existing tools, and prioritising high-impact areas, companies can reap the benefits of AI in testing without overextending their resources or disrupting their core testing activities.

As the use of AI in software testing continues to evolve, it’s crucial for businesses to stay informed and adaptable, but also to approach AI adoption in a way that aligns with their specific needs, constraints, and goals.